By Jan Ozer

[In response to reader questions and comments, this article was updated at 6:20 a.m. on Monday, May 24. See author's comments at the end of the article.—Ed]

VP8 is now free, but if the quality is substandard, who cares? Well, it turns out that the quality isn't substandard, so that's not an issue, but neither is it twice the quality of H.264 at half the bandwidth. See for yourself, below.

To set the table, Sorenson Media was kind enough to encode these comparison files for me to both H.264 and VP8 using their Squish encoding tool. They encoded a standard SD encoding test file that I've been using for years. I'll do more testing once I have access to a VP8 encoder, but wanted to share these quick and dirty results.

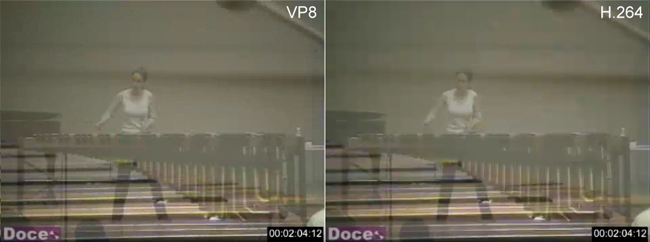

Here are the specs; VP8 on the left, H.264 on the right:

You can download and play the files themselves, though you'll need to download a browser from http://www.webmproject.org/users/ to play the WebM file. Click here to download the H.264 file, and here for the VP8 file.

What about frame comparisons? Here you go; you can click on each to see a larger version that reveals more detail.

Low motion videos like talking heads are easy to compress, so you'll see no real difference.

In another low motion video with a terrible background for encoding (finely detailed wallpaper), the VP8 video retains much more detail than H.264. Interesting result.

Moving to a higher motion video, VP8 holds up fairly well in this martial arts video.

In higher motion videos, though, H.264 seems superior. In this pita video, blocks are visible in the pita where the H.264 video is smooth. The pin-striped shirt in the right background is also sharper in the H.264 video, as is the striped shirt on the left.

In this very high motion skateboard video, H.264 also looks clearer, particularly in the highlighted areas in the fence, where the VP8 video looks slightly artifacted.

In the final comparison, I'd give a slight edge to VP8, which was clearer and showed fewer artifacts.

What's this add up to? I'd say H.264 still offers better quality, but the difference wouldn't be noticeable in most applications.

Update

We've received some comments about the comparisons, and I wanted to address them en masse.

In my haste to post the article, I didn't use the exact same frames in the comparison images. Though the frames I used accurately showed comparative quality, we've updated the images to include the same frames—a better presentation with the same conclusion. In defense of this quick and dirty analysis, we did include links to the encoded source files with the original article, so anyone wishing to dig a bit deeper could certainly have done so and still can (H.264 here; VP8 here). Still, we should have updated to the identical frames sooner.

Some readers have questioned why we used the baseline H.264 profile and the MainConcept codec instead of x264 for our H.264 encoding. As stated in the article, the VP8 and H.264 files were encoded by Sorenson Media using its Squish tool, since Sorenson had apparently been working with Google for some time to get the tool up and running, and could produce the VP8 and H.264 encoded files soon after Google made the WebM announcement. Lots of websites use Squish, and even more use Squeeze; I was comfortable that their encoding of both formats would be representative of true quality. We used non-configurable Squish presets in the interest of time, so we couldn't change the profile from baseline to main, and obviously couldn't substitute x264 for the MainConcept codec that Sorenson deploys.

In my tests, MainConcept has been a consistent leader in H.264 quality, and is certainly a very solid choice for any commercial encoding tool. We have an x264 evaluation on the editorial calendar—I know it's quite good, and I look forward to seeing how it stacks up with others in terms of quality and downstream compatibility. I didn't ask Sorenson how long it took to encode the files, but Google's FAQ does indicate that encoding can be quite slow at the highest quality configurations, though they're working to optimize that.

As I said in the article, "I'll do more testing once I have access to a VP8 encoder, but wanted to share these quick and dirty results." So, hang on for a few weeks, and I'll try to get the next comparison right the first time.

Some other questions:

>> hmmm, do you think VP8 is trying to determine who the primary subject is, and then focusing on providing that region more detail? or it's redistributing the bits in a different way? maybe if you took a look at each frame, and see how much data is being given to each frame

I'm not aware of a tool that provides this information - if you know of one, please let me know.
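One possible approach, offered only as a sketch and not something used for this article, is to dump per-frame compressed sizes with FFmpeg's `ffprobe` and summarize them by picture type. This assumes `ffprobe` is installed and on the PATH; the function names below are hypothetical. Note that it reports how many bytes each frame received, not how bits are distributed among regions within a frame.

```python
import json
import subprocess
from collections import defaultdict


def probe_frames(path):
    """Run ffprobe (assumed to be on PATH) and return its per-frame records."""
    cmd = [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",          # first video stream only
        "-show_entries", "frame=pict_type,pkt_size",
        "-of", "json",
        path,
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return json.loads(out).get("frames", [])


def summarize_frame_sizes(frames):
    """Group compressed frame sizes (in bytes) by picture type (I, P, B)."""
    sizes = defaultdict(list)
    for frame in frames:
        if "pkt_size" not in frame:
            continue  # skip records that carry no size information
        sizes[frame.get("pict_type", "?")].append(int(frame["pkt_size"]))
    return {
        kind: {
            "count": len(values),
            "total_bytes": sum(values),
            "mean_bytes": sum(values) / len(values),
        }
        for kind, values in sizes.items()
    }
```

Calling `summarize_frame_sizes(probe_frames(path))` on each of the two encoded files would give a rough picture of how each encoder spends its bits across frame types.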

>>I think it's a bit misleading to point out background flaws in h264 when the overall quality is higher.

The overall quality of that frame did not appear higher. I look at standard frames each time I compare encoding tools or codecs, and I was simply passing along my observation. I'm not sure how that can be misleading.

>> only 24-bit PNG files are acceptable for frame comparisons

I've never seen a case where high-quality JPEGs were visually distinguishable from PNGs, and PNGs slow web page load times immensely, especially for mobile viewers. I'm open to reconsidering this approach, though; if you want to take PNG frame grabs from the files, compare them to the JPEGs, and show that there's a difference, I'll post the PNGs.

>> The VP8 video is put in a WebM (which is just MKV) container and the H.264 video is put in a mp4 container. Not a big deal, but in a video codec comparison, it only makes sense to keep everything else constant. MKV is able to handle both formats, so why the difference?

This is pretty much irrelevant, and you could equally make the case that it's more accurate to display the H.264 file the way most viewers would actually watch it, which is to say, as H.264.

>> Why is the audio even included? Furthermore, why is the audio encoded differently? The fact that the two files had similar filesizes means nothing when you attach different audio streams.

Audio is included because I like to check synchronization at the end, and folks seem to prefer watching video with audio. You've no doubt noted that I made no audio-related conclusions. Both audio streams are 64 Kbps as reported by MediaInfo, so they shouldn't impact the data rate of the video file, or overall file size. This is also what Sorenson reported; if you have other information about the respective file size, please let me know.

The fact that one file is mono and the other stereo is irrelevant, particularly given the lack of audio-related conclusions and the fact (as far as I can tell) that the audio configuration had the same bit rate.
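The arithmetic behind that point can be sketched as follows: at a constant bit rate, the audio payload depends only on the bit rate and the duration, not on the channel count. The clip duration below is a hypothetical value chosen for illustration (the article does not state one), and container overhead is ignored.

```python
def audio_bytes(bitrate_kbps, duration_seconds):
    """Approximate audio payload in bytes at a constant bit rate.

    Uses 1 Kbps = 1000 bits per second and ignores container overhead.
    """
    return bitrate_kbps * 1000 * duration_seconds / 8


DURATION_S = 60  # hypothetical clip length, not taken from the article

mono_audio = audio_bytes(64, DURATION_S)    # 64 Kbps mono stream
stereo_audio = audio_bytes(64, DURATION_S)  # 64 Kbps stereo stream

# Same bit rate and duration, so both streams add the same number of bytes.
print(mono_audio, stereo_audio, mono_audio == stereo_audio)
```

Under these assumptions, each stream contributes the same amount to its file, so any difference in total file size would come from the video stream or the container.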

>>Finally, take a look at http://x264dev.multimedia.cx/?p=377

Very useful analysis, though I'm glad I focused on a more streaming-oriented configuration. While I wasn't aware of it when I wrote this article, Dan Rayburn let me know about it later that day, and I blogged about it (and quoted it extensively) here.

Sorry for any confusion; I'll try to do better next time.
